Imagine a world where an advanced, trusted AI system could catch criminals the moment they commit a crime, or detect a country the moment it breaks an international agreement. We already see a glimpse of this in satellite imagery analysis, which lets people track changes to the Earth's surface. This capability gives many different actors (good, neutral, and bad) new opportunities for surveillance, which raises the question of how such power should be used and brings us to the concept of Responsible AI.

As an AI ethicist specializing in Responsible AI, I understand how essential it is for society to consider the consequences of developing such advanced technology and to ensure that AI is developed responsibly. As Stephen Hawking once said, "Success in creating AI would be the biggest event in human history. Unfortunately, it might also be the last, unless we learn how to avoid the risks."

In banking specifically, Responsible AI has a major role to play in reducing bias and hallucinations while enabling transparency. The future of AI in banks, credit unions, and beyond depends on AI engineers, policymakers, and other leaders acting to ensure a safe, responsible coexistence with AI. It all starts with understanding the principles and values associated with Responsible AI.

What is Responsible AI?

Responsible AI is an approach to developing AI in a manner that is accountable, ethical, fair, trustworthy, and transparent. The objective is to harness the benefits of AI for society while minimizing potential risks and harm. Its fundamental principles fall into three buckets (ethics, explainability, and governance), each with associated values, as shown in the figure below.

Principle #1: AI Ethics

This branch of Responsible AI centers on the implications, risks, and impacts of developing and using AI technologies. Example topics that interest AI ethicists include privacy, bias and discrimination, and job displacement.

Some common values associated with AI Ethics include:

- Accountability: Taking responsibility for the AI system's actions. If an algorithm fails, the individuals who developed the AI system must take responsibility for the mistake rather than blaming the algorithm.

- Advocacy: Actively advocating for ethical AI practices and raising awareness about associated principles and challenges. Who is going to seek change and protection if no one is speaking about the challenges? By advocating for AI Ethics, you are changing the system.

- Bias Mitigation: Implementing strategies to reduce or eliminate biases within an AI system. To limit bias, it is important to consider what problematic data you are exposing your algorithm to. Removing or correcting that data reduces the biases the algorithm learns.

- Education/Training: Preparing those who use the AI system to recognize the ethical considerations related to AI. One of the most effective ways to ensure Responsible AI is to teach others the concepts involved.

- Environmental Impact: Considering the environmental consequences of AI development and use. Training and running models consumes energy, which leaves a carbon footprint, and the hardware they run on eventually becomes e-waste.

- Fairness: Ensuring that AI systems are developed and used in a way that avoids bias, discrimination, and unequal treatment. This is a broader concept than Bias Mitigation, encompassing ethical considerations throughout the entire AI lifecycle.

- Freedom: Preserving and protecting individual and societal freedoms and rights. Freedom involves giving users the choice of whether to use AI and the ability to opt in or out at any point.

- Privacy: Protecting individuals' rights to control their personal information and data. Privacy concerns what data is collected about an individual and how it is used to train or inform a model. People should be able to protect what they view and what they do, such as their financial activity.

- Risk Management: Identifying, assessing, and mitigating the ethical, legal, and societal risks associated with AI. In practice, an algorithm should not expose a person's identity or otherwise put them at risk.

- Transparency: Making AI systems understandable and accessible to users and stakeholders. A person interacting with an AI system should be able to understand how it reaches its decisions.

Principle #2: Explainability

Making AI explainable means being able to understand how a model was designed and how it reaches its decisions. An example of algorithmic transparency is explaining how a patient's diagnosis was determined in a healthcare setting.
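To make this concrete in a banking setting, here is a minimal sketch of explaining a single decision from a simple linear scoring model. The `LinearScorer` class, the feature names, and the weights are hypothetical, chosen only for illustration; they do not describe any real or Posh model. For a linear model, each feature's contribution is its weight times its value, and the contributions plus the bias add up exactly to the score, so the explanation faithfully reflects how the decision was reached. More complex models generally need dedicated attribution methods instead.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical linear scoring model: score = bias + sum(weight * feature)."""

    bias: float
    weights: dict[str, float]

    def score(self, features: dict[str, float]) -> float:
        # Weighted sum of the applicant's features plus a constant offset.
        return self.bias + sum(w * features[name] for name, w in self.weights.items())

    def explain(self, features: dict[str, float]) -> list[tuple[str, float]]:
        """Return each feature's contribution, largest absolute effect first.

        For a linear model these contributions plus the bias sum exactly to
        score(), so nothing about the decision is hidden from the reader.
        """
        contributions = [(name, w * features[name]) for name, w in self.weights.items()]
        return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)
```

For example, with hypothetical weights `{"on_time_payment_rate": 2.0, "debt_to_income": -1.5}` and an applicant with values 0.9 and 0.4, `explain()` lists `on_time_payment_rate` first, because its contribution (1.8) outweighs the debt-to-income penalty (about -0.6). That ranked list is the kind of answer a customer could be given when asking why a decision was made.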

Some common values associated with Explainability include:

- Advocacy: Making AI decision-making processes understandable and transparent to the public. Whereas advocacy under AI Ethics focuses on those who work in the industry, advocacy here aims to help the public understand how AI algorithms work.

- Transparency: The quality of an AI system being open and understandable, allowing others to comprehend how the AI system makes its decisions. This means the public is able to understand why a decision was made.

Principle #3: Governance

Governance refers to the framework, policies, and practices in place to supervise the development and use of AI. An example of this is President Biden’s Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence.

Some common values associated with Governance include:

- Accountability: Establishing practices and policies that hold entities responsible for the development and use of their AI systems. This sets responsibility guidelines at the national or international level rather than company by company.

- Advocacy: Influencing the development of frameworks and policies related to AI. This is directed specifically at encouraging regulatory bodies to work together to create policies.

- Compliance: Adhering to relevant laws, regulations, and guidelines in the development and use of AI. This ensures that AI developers comply with all applicable regulations while building an AI algorithm, which protects users.

- Data Governance: Practices for managing the data used to develop and operate AI in a responsible, ethical, and compliant manner. This helps companies build healthy practices for managing critical information, such as personally identifiable information.

- Monitoring: The ongoing, systematic oversight of how AI is developed and used. It ensures that the algorithm continues to work as intended.

- Risk Management: Creating policies that ensure risks (such as ethical, legal, technical, and social risks) are mitigated.

- Security: Comprehensive measures and practices to protect AI from potential threats, vulnerabilities, and unauthorized access. Making sure unauthorized users can't access the algorithm is critical to protecting your users.

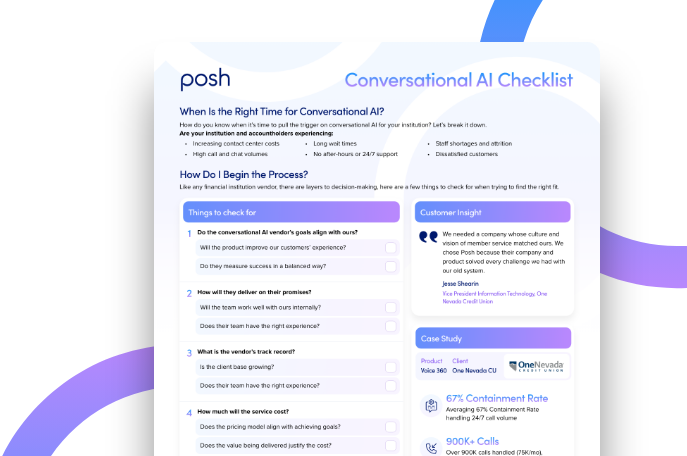

Why Responsible AI Matters to Posh

Posh places great importance on Responsible AI, as reflected in our guiding principles: "See something, do something; Trust by default; Follow the data; Serve the user; Ensure a quality foundation; Tolerate ambiguity; and Invest in each other." These principles guide our company in advocating for the FinTech community. A prime example is our privacy work, which draws on Serve the User: the practice of creating better customer experiences. We take a privacy-by-design approach that replaces sensitive values with alternative identifiers. For example, a PIN is masked as ****, and a phone number is replaced with a Chat Log ID, so that individuals cannot be identified as data subjects.
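The two techniques described above, masking a sensitive value and swapping a direct identifier for an alternative one, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Posh's implementation: the function and class names, the `chat-` prefix, the random-token scheme, and the North American phone-number pattern are all hypothetical choices made for the example.

```python
import re
import secrets


def mask_pin(pin: str) -> str:
    """Replace every character of a PIN with '*' so it is never shown in clear text."""
    return "*" * len(pin)


class ChatLogPseudonymizer:
    """Hypothetical helper that swaps phone numbers for opaque chat-log IDs.

    The phone-to-ID mapping lives only in this object; a real system would
    keep it in a separate, access-controlled store.
    """

    def __init__(self) -> None:
        self._ids: dict[str, str] = {}

    def chat_log_id(self, phone: str) -> str:
        # Normalize to digits so "(555) 010-0199" and "555-010-0199" share one ID.
        digits = re.sub(r"\D", "", phone)
        if digits not in self._ids:
            # Random token: the ID itself reveals nothing about the phone number.
            self._ids[digits] = "chat-" + secrets.token_hex(8)
        return self._ids[digits]


# Assumed format: 10-digit North American numbers with optional separators.
PHONE_PATTERN = re.compile(r"\(?\d{3}\)?[\s.-]?\d{3}[\s.-]?\d{4}")


def redact_transcript(text: str, pseudonymizer: ChatLogPseudonymizer) -> str:
    """Replace every phone number found in a transcript with its chat-log ID."""
    return PHONE_PATTERN.sub(lambda m: pseudonymizer.chat_log_id(m.group()), text)
```

Because each ID is a random token, it cannot be turned back into the phone number without the mapping, which is why that mapping would need to live apart from the transcripts. A keyed hash such as HMAC is a common alternative when the same ID must be reproducible without storing a mapping.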

Posh also applies Responsible AI in how we empower Client Contact Center Agents, guided by our principle to Follow the Data. Our objective is to use conversational AI as a supportive tool that eases common stressors agents experience. This involves a range of tasks, including answering frequently asked questions, facilitating discussions about banking transactions, and improving agents' overall knowledge of contact center operations. We are committed to serving as a collaborative partner and tool in this effort: the aim is to assist agents, not to automate and eliminate their jobs.

Responsible AI Policies and Resources

As more organizations develop AI, it has become imperative for companies to take responsibility for building it responsibly. Major players, such as Apple, Accenture, Google, and Microsoft, have taken significant steps in this direction by creating their own Responsible AI guidelines. Posh, like other purpose-built AI companies, looks to major enterprises like these to guide decisions about Responsible AI guidelines while also engaging with financial industry leaders across technology platforms, the banking sector, and regulatory bodies. With all these insights and viewpoints in mind, Posh is thoughtfully and carefully building products that are risk-averse and follow Responsible AI practices.

If you are on the path to becoming an advocate for Responsible AI, the following resources can assist you in your journey:

Author Kylie Leonard is a Conversational AI Designer at Posh. She holds a Doctorate in Technology with a focus on Responsible AI from Purdue University. In her spare time, she enjoys spending time with her family and friends, watching reality TV, reading sci-fi and fantasy, and taking photos of her cat, Jewel.

